Last year, we had a client who needed a test bench solution. The client was excited about AI. He had finished an elaborate program on machine learning himself. He now understood machine learning algorithms, neural networks, cost functions, weights, biases, optimizers, data engineering and a lot more.

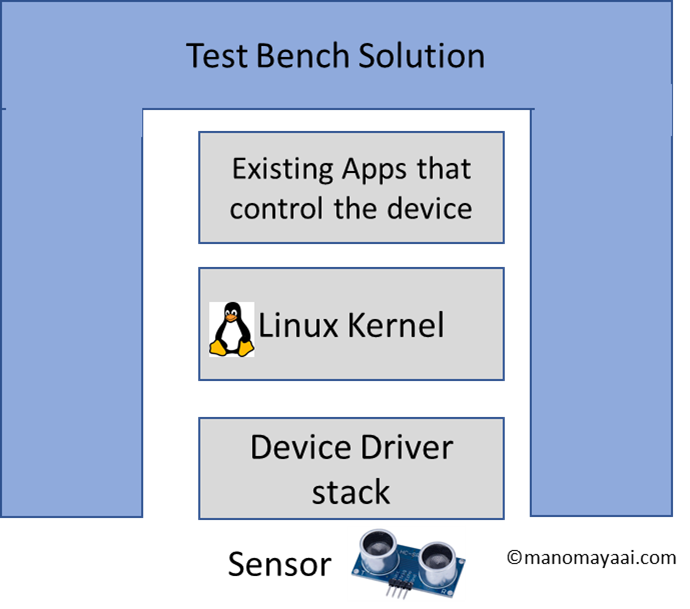

The project was a test bench solution involving complex embedded hardware. The users were the testers, who are domain experts too. The main focus was performance testing of a particular signal. I won’t reveal whether it came from a multimedia device, a biometric sensor, a simple sensor or some other complex sensor. The signal comes ‘up’ to the test logic of the test bench through a complex layer of drivers.

I have nothing surprising to show you in the diagram. Test bench solutions tap inputs from multiple levels. These inputs are then used in the test logic to fulfil the needs of the test case. On top of this basic need, further features like reporting, import/export, etc. are added to the test solution.

Here comes the AI. The AI-enthusiast product owner proposes inserting AI into the solution, but senior management is skeptical. Sure, they are under pressure to show something on AI for the annual report. But they also want real value out of the investment.

The issue with many AI evangelists is that they talk more about AI than about the solution and the specific value AI brings to it.

Continuing with our story, the product owner somehow gets approval for an AI-based test project. That was only the beginning.

The Challenges

As the AI-based tests were developed, the product experts started raising questions. They questioned both the reliability and the value of AI. The domain experts know a lot. They can explain exactly how the current system works. When something does not work, they can pinpoint the issue. Sometimes, though, too much knowledge results in rigidity and may make them oppose anything new. Moreover, AI seems like an enigma to them. With conventional code, it is easy to go through and understand the logic; a trained model does not offer the same transparency.

In our case, the product owner had two challenges:

1. Find good answers to the objections raised.
2. Influence the experts. If that is not possible, convince the management against the expert opinion.

Finding good answers on AI value and reliability needs a good team. If your AI team is obsessed only with machine learning, there is a problem. In this case, we spent a lot of time building the non-AI solution. We almost became domain experts. That was possible because we were not just into AI technologies. We were a systems and solutions team rather than a bunch of ML fanatics.

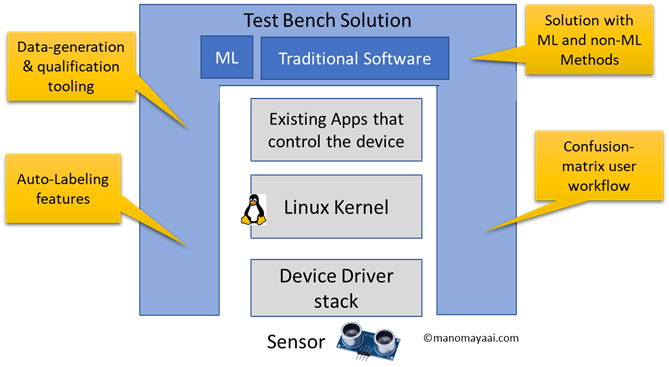

The specifics of the solution are irrelevant. The key point is that the product experts and end users could see AI and non-AI results side by side. There was no reason to object: their demand for a traditional solution was taken care of, and as a bonus they could see the AI solution too. Over time, they got used to seeing its benefits, and the resistance gradually faded away.
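Since the solution details stay private, the following is only a hypothetical sketch of the side-by-side idea. It assumes every sample runs through both the existing rule-based check and an AI check, and the two verdicts are reported together with an agreement rate. `traditional_check`, `ai_check`, their thresholds and the sample data are made-up stand-ins, not the project's actual logic. In practice, a trained model would replace the toy score in `ai_check`.

```python
from dataclasses import dataclass
from typing import Callable, Sequence


@dataclass
class SideBySide:
    sample_id: str
    traditional: bool  # verdict from the existing (non-AI) test logic
    ai: bool           # verdict from the AI check


def compare(samples: Sequence[tuple[str, list[float]]],
            traditional_check: Callable[[list[float]], bool],
            ai_check: Callable[[list[float]], bool]) -> list[SideBySide]:
    """Run both checks on every sample so results can be shown side by side."""
    return [SideBySide(sid, traditional_check(x), ai_check(x)) for sid, x in samples]


def agreement_rate(rows: list[SideBySide]) -> float:
    """Fraction of samples where the AI verdict matches the traditional one."""
    if not rows:
        return 0.0
    return sum(r.traditional == r.ai for r in rows) / len(rows)


# Hypothetical stand-in for the traditional rule: fixed threshold on peak amplitude.
def traditional_check(signal: list[float]) -> bool:
    return max(signal) <= 1.0


# Hypothetical stand-in for the AI check: a toy score, not a real model.
def ai_check(signal: list[float]) -> bool:
    score = sum(abs(v) for v in signal) / len(signal)
    return score < 0.8


samples = [("s1", [0.1, 0.5, 0.9]), ("s2", [0.2, 1.4, 0.3]), ("s3", [0.9, 0.95, 0.99])]
rows = compare(samples, traditional_check, ai_check)
for r in rows:
    trad = "PASS" if r.traditional else "FAIL"
    ai = "PASS" if r.ai else "FAIL"
    print(f"{r.sample_id}: traditional={trad} ai={ai}")
print(f"agreement: {agreement_rate(rows):.0%}")
```

Keeping the traditional verdict in every report means the experts never lose the output they already trust. Disagreements between the two columns become concrete cases to discuss rather than abstract objections.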

The Solution

Both challenges were addressed because the AI team worked as a systems engineering team that put the solution first. It had a perspective spanning from the sensor and device drivers all the way up to the application-level microservices. It also cared enough to define the workflow for gathering data and semi-automating labeling.

I am surprised that many data scientists see ML as an exclusive, distinct solution instead of as one of the building blocks of the overall solution. For AI to be effective, the business workflow, the user workflow and the solution development workflow must be well thought through.

Any AI project should be seen as a full-fledged systems engineering project that integrates many different workflows. Seeing it only as a demonstration of data science competencies could lead to failure. That would harm data science initiatives across the organization, not just in one project.

Give people confidence that the data science team is there to solve a problem, not to do data science for its own sake. That goes a long way toward gaining acceptance.